I studied with AI, and eventually became an OpenAI researcher

Not long ago, I attended an AI meetup in Shanghai.

The event focused heavily on real-world AI applications.

What stood out most to me, however, was a learning strategy shared by a veteran investor.

He explained that this method not only saved his career but also transformed how he evaluates people as an investor.

So, what is it? It’s mastering the art of asking questions.

Whenever you’re curious about a subject, engage with DeepSeek. Keep probing—ask questions until it can no longer provide answers.

This “infinite questioning” approach struck me as profound at the time, but after the event, I quickly forgot about it.

I didn’t try it, nor did I dwell on it.

It wasn’t until recently, when I came across Gabriel Petersson’s story—how he dropped out of school and used AI to learn his way into OpenAI—that I realized the true significance of “asking until the end” in the AI era.

Gabriel Interview Podcast | Source: YouTube

From High School Dropout to OpenAI Researcher: An Unlikely Journey

Gabriel hails from Sweden and left high school before graduating.

Gabriel’s Social Media Profile | Source: X

He used to believe he simply wasn’t smart enough for a career in AI.

Everything changed a few years ago.

His cousin launched a startup in Stockholm, building an e-commerce product recommendation system, and invited Gabriel to join the team.

Gabriel accepted, despite having no technical background or savings. In the early days, he even spent a full year sleeping on the office couch.

But that year was transformative. He didn’t learn in a classroom—he learned under pressure, solving real-world problems: programming, sales, and system integration.

To further accelerate his growth, he became a contractor, giving himself the flexibility to choose projects, collaborate with top engineers, and proactively seek feedback.

When applying for a US visa, he faced a dilemma: the visa category he needed requires proof of “extraordinary ability,” typically demonstrated by academic publications and citations.

How could a high school dropout possibly provide that?

Gabriel devised a solution: he compiled his top technical posts from developer communities as alternative “scholarly contributions.” Surprisingly, immigration authorities accepted this.

After relocating to San Francisco, he continued to self-study math and machine learning using ChatGPT.

Today, Gabriel is a research scientist at OpenAI, contributing to the development of the Sora video model.

At this point, you’re probably wondering—how did he pull this off?

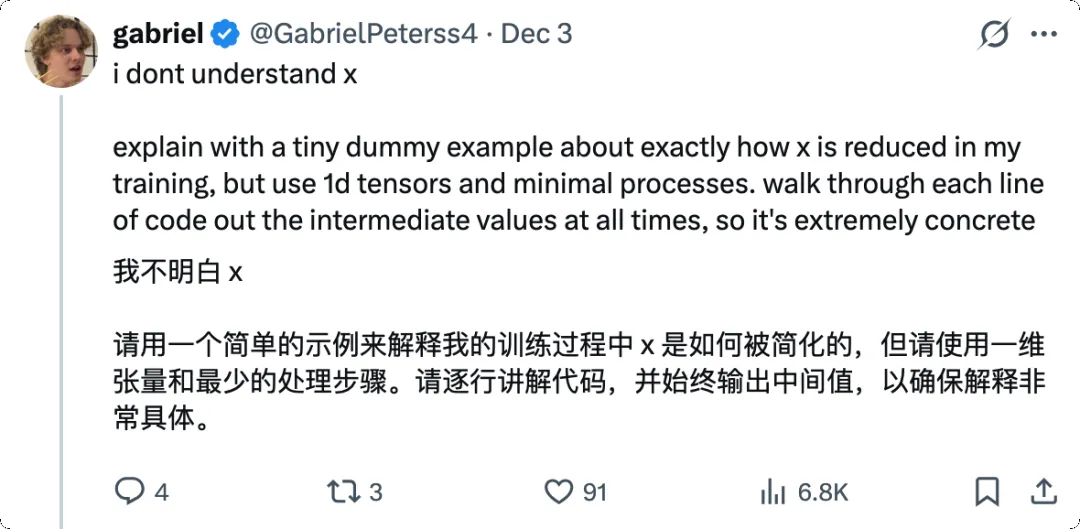

Gabriel’s Insights | Source: X

Recursive Knowledge Filling: A Counterintuitive Approach to Learning

The answer is “infinite questioning”: select a concrete problem and leverage AI to fully resolve it.

Gabriel’s learning strategy runs counter to most people’s intuition.

Traditionally, learning is “bottom-up”: you build a foundation first, then move to practical applications. For example, to study machine learning, you’d first learn linear algebra, probability theory, and calculus, then statistical learning, then deep learning, and only much later tackle real projects. This process can take years.

His approach is “top-down”: start with a specific project, solve problems as they arise, and fill knowledge gaps on demand.

As he explained on a podcast, this method was hard to scale in the past—you needed an all-knowing teacher who could tell you what to learn next at any moment.

Now, ChatGPT fills that role.

How does this work in practice? He gave an example: learning diffusion models.

Step one: start with the big picture. He asks ChatGPT, “I want to learn about video models—what’s the core concept?” The AI responds: autoencoders.

Step two: code first. He asks ChatGPT to write a diffusion model code snippet. He doesn’t understand much at first, but that’s fine—he runs the code anyway. If it works, he has a foundation for debugging.

Step three, and most critically: recursive questioning. He examines every module in the code and interrogates each one.

He drills down layer by layer until he fully grasps the underlying logic, then returns to the previous level to continue with the next module.

He calls this process “recursive knowledge filling.”

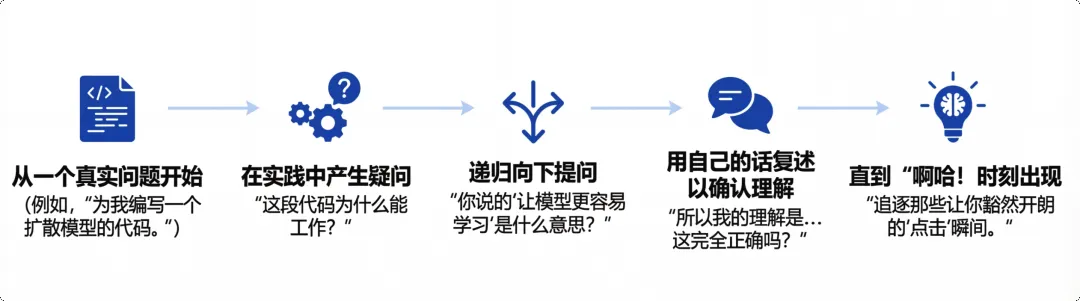

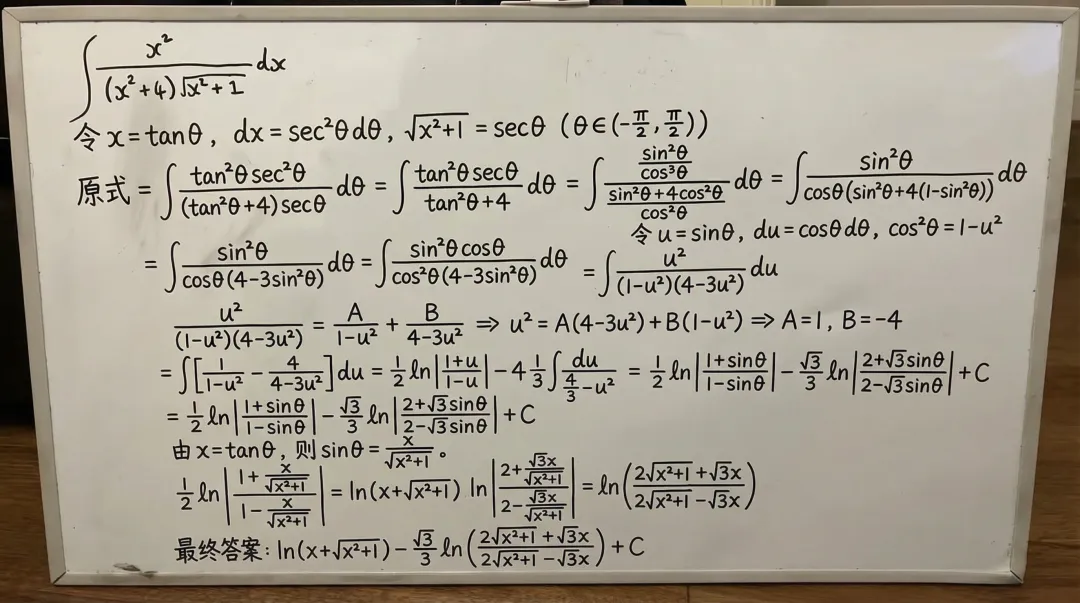

Recursive Knowledge Filling | Source: nanobaba2
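The drill-down-then-return pattern described above is essentially a depth-first traversal. The following is a minimal sketch of that idea, not Gabriel's actual workflow: `fill_knowledge`, `toy_explain`, and the `TOY_ANSWERS` concept map are hypothetical names invented for illustration, and the toy map stands in for what you would really do, which is read the AI's answer and decide which terms you still don't understand.

```python
from typing import Callable, List, Optional, Set

# Hypothetical stand-in for a chat model: maps a topic to the sub-concepts
# its explanation relies on.
Explain = Callable[[str], List[str]]


def fill_knowledge(topic: str, explain: Explain, visited: Set[str],
                   depth: int = 0, max_depth: int = 5,
                   trail: Optional[List[str]] = None) -> List[str]:
    """Depth-first walk: drill into each unfamiliar sub-concept before
    returning to the parent level and moving on to the next one."""
    if trail is None:
        trail = []
    # Skip topics already covered, and stop if the chain gets too deep.
    if topic in visited or depth > max_depth:
        return trail
    visited.add(topic)  # mark before recursing so cycles cannot loop forever
    trail.append("  " * depth + topic)
    for sub in explain(topic):
        fill_knowledge(sub, explain, visited, depth + 1, max_depth, trail)
    return trail


# Illustrative concept map only; a real session would produce its own.
TOY_ANSWERS = {
    "diffusion model": ["noise schedule", "denoising network"],
    "noise schedule": ["Gaussian noise"],
    "denoising network": ["U-Net", "Gaussian noise"],
}


def toy_explain(topic: str) -> List[str]:
    return TOY_ANSWERS.get(topic, [])


trail = fill_knowledge("diffusion model", toy_explain, visited=set())
print("\n".join(trail))
```

The indentation in the printed trail shows the order of questioning: each branch is followed to the bottom before the next module is opened, and a concept that was already covered (here, the second mention of Gaussian noise) is not revisited.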

This approach is far faster than spending six years on step-by-step study—you might develop foundational intuition in just three days.

If you’re familiar with the Socratic method, you’ll recognize the same principle: you approach the heart of a topic through relentless questioning, with each answer becoming the starting point for the next question.

The difference now is that AI is the one being questioned. And because AI has absorbed knowledge from nearly every field, it can keep explaining the essence of things in accessible terms.

In essence, Gabriel uses this approach to extract the core of knowledge from AI—and truly understand the subject.

Most People Are Getting Dumber Using AI

After listening to the podcast, I kept wondering:

Why do some people, like him, use AI to learn so effectively, while many others feel they’re regressing?

It’s not just my personal impression.

A 2025 Microsoft Research paper [1] reports that heavier reliance on generative AI is associated with a marked decline in critical thinking.

In other words, we’re outsourcing our thinking to AI, and our own cognitive abilities are atrophying as a result.

Skill development follows the “use it or lose it” principle: when we use AI to write code, our own ability to code quietly declines.

Working with AI in a “vibe coding” style may seem efficient, but in the long run, programmers’ actual coding skills erode.

You hand your requirements to AI, it generates code, you run it, and it feels great. But ask people to turn off the AI and write the core logic themselves, and many find their minds go blank.

Even more striking are findings from medicine. One study [2] found that doctors’ colonoscopy detection skills dropped by 6% after three months of using AI assistance.

That may sound minor, but consider: this is real clinical diagnostic capability that impacts patient health and lives.

So, the question is: why do some people get stronger using the same tool, while others get weaker?

The difference is in how you use AI.

If you treat AI as a tool to do the work for you—writing code, drafting articles, making decisions—your skills will atrophy. You skip the thinking process and only receive the result. Results can be copied and pasted, but critical thinking doesn’t grow on its own.

But if you treat AI as a coach or mentor—using it to test your understanding, probe your blind spots, and force yourself to clarify vague concepts—you’re actually accelerating your learning with AI.

The essence of Gabriel’s method is not “let AI learn for me,” but “let AI learn with me.” He is always the active questioner, and AI simply provides feedback and material. Every “why” is his own, and every layer of understanding is something he unearths himself.

This reminds me of the saying: “Give a man a fish, and you feed him for a day; teach a man to fish, and you feed him for a lifetime.”

Practical Takeaways

You might be wondering: I’m not an AI researcher or a programmer—how does this method apply to me?

I believe Gabriel’s approach can be generalized into a five-step framework anyone can use to learn any unfamiliar field with AI.

1. Start with real problems—not chapter one of a textbook.

Dive in directly. When you get stuck, fill the gap as needed.

This way, your knowledge has context and purpose, making it much more effective than memorizing isolated facts.

2. Treat AI as an infinitely patient mentor.

You can ask any question, no matter how basic. Have it explain concepts in multiple ways, or “explain it like I’m five.”

It won’t judge or get impatient.

3. Keep asking until you build intuition. Don’t settle for surface-level understanding.

Can you explain a concept in your own words? Can you give an example not mentioned in the original source?

Can you teach it to a layperson? If not, keep asking.

4. Beware: AI can hallucinate.

When you recursively question, if AI gets a core concept wrong, you could end up further from the truth.

So, at key points, cross-validate with multiple AIs to ensure your foundation is solid.

5. Document your questioning process.

This creates a reusable knowledge asset. Next time you face a similar problem, you have a complete thought process to review.
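Steps 4 and 5 can be combined into a simple question log. The sketch below is one possible shape, not something taken from Gabriel's interview: the `Entry` fields, the `needs_review` rule, and the source names `model_a` and `model_b` are all hypothetical. It also assumes you have already boiled each answer down to a short claim, so that plain string comparison is meaningful.

```python
import json
from dataclasses import dataclass, asdict
from typing import Dict, List


@dataclass
class Entry:
    question: str
    answers: Dict[str, str]   # source name -> short claim distilled from its answer
    parent: int = -1          # index of the question that prompted this one (-1 = root)
    my_summary: str = ""      # your own restatement, per step 3

    def needs_review(self) -> bool:
        """Flag entries backed by a single source or by sources that disagree."""
        distinct = {a.strip().lower() for a in self.answers.values()}
        return len(self.answers) < 2 or len(distinct) > 1


def to_json(log: List[Entry]) -> str:
    """Serialize the whole questioning trail so it can be reviewed later."""
    return json.dumps([asdict(e) for e in log], indent=2)


log = [
    Entry("What does a noise schedule control?",
          {"model_a": "how much noise is added per step",
           "model_b": "How much noise is added per step"}),
    Entry("Why is the noise Gaussian?",
          {"model_a": "placeholder claim"}, parent=0),
]

flags = [e.needs_review() for e in log]
print(flags)         # [False, True]
print(to_json(log))
```

The `parent` index preserves the chain of follow-up questions, so the saved file records not just the answers but the path you took to reach them.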

Traditionally, tools are valued for reducing friction and boosting efficiency.

But when it comes to learning, the opposite is true: moderate friction and necessary obstacles are prerequisites for real learning. If everything is too smooth, your brain shifts into energy-saving mode and nothing sticks.

Gabriel’s recursive questioning deliberately creates friction.

He keeps asking why, pushing himself to the edge of his understanding, and then gradually fills in the gaps.

This process is uncomfortable, but it’s exactly this discomfort that enables true long-term memory.

The Future of Work: Multi-Skilled Specialists

In today’s world, the monopoly of academic credentials is fading, but cognitive barriers are quietly rising.

Most people treat AI as an “answer generator,” but a select few, like Gabriel, use it as a “thinking trainer.”

Similar techniques are already emerging across industries.

For example, on Jike, many parents use nanobanana to help their children with homework. But instead of letting AI give the answer, they have it generate step-by-step solutions, analyze each step, and discuss the logic with their kids.

This way, children learn not just the answer, but the method for solving problems.

Prompt: “Solve the given integral and write the complete solution on the whiteboard” | Source: nanobaba2

Others use Listenhub or NotebookLM to turn long articles or papers into podcast-style dialogues between two AI voices, explaining, questioning, and discussing. Some see this as laziness, but others find that listening to the dialogue and then reading the original text actually improves comprehension.

Because during the dialogue, questions naturally arise, forcing you to consider: do I really understand this point?

Gabriel’s Interview Converted into an AI Podcast | Source: NotebookLM

This signals a future trend: the rise of multi-skilled specialists.

In the past, building a product required knowledge of front-end, back-end, design, operations, and marketing. Now, like Gabriel, you can use the “recursive knowledge filling” method to quickly master 80% of what you lack in any area.

If you started as a programmer, AI can help you fill gaps in design and business logic, turning you into a product manager.

If you were a strong content creator, AI can quickly help you develop coding skills and become an independent developer.

Given this trend, we may see more “one-person companies” in the future.

Take Back Control of Your Learning

Reflecting on that investor’s advice, I finally understand his real message.

“Keep asking until there are no more answers.”

This is a powerful mindset in the AI era.

If we settle for the first answer AI provides, we’re quietly regressing.

But if we keep probing, push AI to clarify its logic, and internalize that understanding, then AI becomes our extension—not our replacement.

Don’t let ChatGPT think for you—make it think with you.

Gabriel went from a high school dropout sleeping on a couch to an OpenAI researcher.

There’s no secret—just relentless questioning, thousands of times over.

In an era where AI replacement anxiety runs high, perhaps the most practical weapon is this:

Don’t settle for the first answer. Keep asking.

Statement:

- This article is republished from [geekpark], with copyright belonging to the original author [Jin Guanghao]. If you have concerns about this reprint, please contact the Gate Learn team, and we will address your request promptly in accordance with relevant procedures.

- Disclaimer: The views and opinions expressed in this article are those of the author alone and do not constitute investment advice.

- Other language versions of this article are translated by the Gate Learn team. Unless Gate is cited, do not copy, distribute, or plagiarize the translated article.
